Kubernetes Provider

Self-hosted Kubernetes Runner Pools let you dynamically provision Runners to run Factory pipelines on any Kubernetes cluster. The Runner Pool is packaged as an executable that runs in a container, and it orchestrates the provisioning and deprovisioning of Runner containers on the same Kubernetes cluster.

Requirements

- A Kubernetes cluster
- The helm CLI configured to run commands against the Kubernetes cluster
- The kubectl CLI configured to run commands against the Kubernetes cluster
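Before continuing, you may want to confirm that both CLIs are installed and that kubectl can reach the cluster. The following is a minimal Bash sketch. The function name check_prereqs is hypothetical, and the sketch assumes that a successful kubectl cluster-info call is a sufficient connectivity test for your setup.

```shell
# Hypothetical pre-flight check: verify that helm and kubectl are available
# and that kubectl can reach the cluster in the current kubeconfig context.
check_prereqs() {
  local tool
  for tool in helm kubectl; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "Missing required CLI: $tool" >&2
      return 1
    fi
  done
  # cluster-info exits non-zero if the API server cannot be reached.
  if ! kubectl cluster-info >/dev/null 2>&1; then
    echo "kubectl cannot reach the Kubernetes cluster" >&2
    return 1
  fi
}
```

Run check_prereqs after sourcing the snippet. A non-zero exit status means one of the requirements is not met.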

Retrieve the Runner Pool Helm Chart

Follow these steps to retrieve the Runner Pool Helm Chart:

- Visit the Factory Releases page.
- Choose which release of runner-pool to deploy.
- Expand the Assets for that release.
- Click the Runner Pool Helm Chart link to download an archive containing the Helm chart.
- Extract the contents of the archive. See the example below:

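```shell
# tar -C requires the target directory to exist, so create it first.
mkdir -p "/runway/runner-pool-helm"
tar -xvzf "/runway/factory-rm-0.135.1.tgz" -C "/runway/runner-pool-helm"
```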
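The helm install step later in this guide expects the path of the chart directory inside the extraction folder (factory-rm-0.135.1 in the examples). You could locate that directory by searching for the Chart.yaml file that every Helm chart has at its root. The sketch below assumes the archive contains a single chart, and find_chart_dir is a hypothetical helper name.

```shell
# Hypothetical helper: print the directory of the first Chart.yaml found
# directly in, or one level below, the extraction directory.
# Assumes the archive contains a single chart (for example factory-rm-0.135.1/).
find_chart_dir() {
  local extract_dir="$1"
  local chart_file
  chart_file=$(find "$extract_dir" -maxdepth 2 -name Chart.yaml -print | head -n 1)
  if [ -z "$chart_file" ]; then
    echo "No Chart.yaml found under $extract_dir" >&2
    return 1
  fi
  dirname "$chart_file"
}
```

For example, assuming the archive layout used above, CHART_DIR=$(find_chart_dir "/runway/runner-pool-helm") would set CHART_DIR to /runway/runner-pool-helm/factory-rm-0.135.1.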

Kubernetes Provider Configuration

Below are the configuration options specific to the Kubernetes Provider with the default values shown.

```json
{
  "RUNNER_K8S_IMAGE_REGISTRY": "864584331736.dkr.ecr.us-west-2.amazonaws.com",
  "RUNNER_K8S_IMAGE_REPOSITORY": "sophos/fac-runner",
  "RUNNER_K8S_IMAGE_TAG": "latest",
  "RUNNER_K8S_NAMESPACE": "${ prefix }-${ env }-runner",
  "RUNNER_K8S_CPU_REQUEST": "",
  "RUNNER_K8S_CPU_LIMIT": "",
  "RUNNER_K8S_MEM_REQUEST": "",
  "RUNNER_K8S_MEM_LIMIT": ""
}
```

- RUNNER_K8S_IMAGE_REGISTRY: Location of the container registry.
- RUNNER_K8S_IMAGE_REPOSITORY: Name of the container repository.
- RUNNER_K8S_IMAGE_TAG: Container image tag to use.
- RUNNER_K8S_NAMESPACE: Namespace to deploy Runners to.
- RUNNER_K8S_CPU_REQUEST: CPU to request for each Runner pod, as a Kubernetes CPU quantity (for example 400m).
- RUNNER_K8S_CPU_LIMIT: CPU limit for each Runner pod.
- RUNNER_K8S_MEM_REQUEST: Memory to request for each Runner pod, as a Kubernetes memory quantity (for example 500Mi).
- RUNNER_K8S_MEM_LIMIT: Memory limit for each Runner pod.
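Kubernetes rejects a pod whose resource request is greater than its limit, so you may want to check these four values before installing. The sketch below converts CPU quantities (such as 400m or 0.5) to millicores and memory quantities (such as 500Mi) to bytes, using the standard Kubernetes suffixes. It then compares each request with its limit. The function names are hypothetical, and exponent notation and less common suffixes are not handled. Empty values are skipped, on the assumption that an empty setting leaves that request or limit unset.

```shell
# Hypothetical helpers for sanity-checking the RUNNER_K8S_* resource settings.

# Convert a CPU quantity ("400m", "0.5", "2") to millicores.
cpu_to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *) awk -v c="$1" 'BEGIN { printf "%.0f\n", c * 1000 }' ;;
  esac
}

# Convert a memory quantity ("500Mi", "1Gi", "128M", "1048576") to bytes.
# Returns non-zero for suffixes this sketch does not recognise.
mem_to_bytes() {
  awk -v q="$1" 'BEGIN {
    n = q + 0
    s = q
    sub(/^[0-9.]+/, "", s)
    m["Ki"] = 1024; m["Mi"] = 1024^2; m["Gi"] = 1024^3; m["Ti"] = 1024^4
    m["k"] = 1000;  m["M"] = 1000^2;  m["G"] = 1000^3;  m["T"] = 1000^4
    m[""] = 1
    if (!(s in m)) exit 1
    printf "%.0f\n", n * m[s]
  }'
}

# Fail if a request exceeds its limit. Empty values are skipped
# (assumption: an empty setting leaves that request or limit unset).
validate_resources() {
  local cpu_req="$1" cpu_lim="$2" mem_req="$3" mem_lim="$4" a b
  if [ -n "$cpu_req" ] && [ -n "$cpu_lim" ]; then
    a=$(cpu_to_millicores "$cpu_req") && b=$(cpu_to_millicores "$cpu_lim") || return 1
    if [ "$a" -gt "$b" ]; then
      echo "CPU request $cpu_req exceeds CPU limit $cpu_lim" >&2
      return 1
    fi
  fi
  if [ -n "$mem_req" ] && [ -n "$mem_lim" ]; then
    a=$(mem_to_bytes "$mem_req") && b=$(mem_to_bytes "$mem_lim") || return 1
    if [ "$a" -gt "$b" ]; then
      echo "Memory request $mem_req exceeds memory limit $mem_lim" >&2
      return 1
    fi
  fi
}
```

For example, validate_resources 400m 400m 500Mi 500Mi succeeds for the values used in the example values file, while a CPU request of 800m with a 400m limit would fail.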

Create a Helm --values file

Create a YAML file containing the configuration options above, tailored to your environment. You will pass the path of this file to the helm --values parameter when you install the chart. The extracted archive includes an example values.yaml file. The following example values.yaml file contains the minimum configuration options:

```yaml
---
global:
  agentApiFqdn: agent-api.factory.sophos.com
runnerManager:
  name: kubernetes-runner-pool
serviceAccount:
  create: true
  name: runner-pool-service-account
k8sRunner:
  create: true
secrets:
  RUNNER_MANAGER_KEY: "<YourRunnerManagerKeyHere>"
config:
  ENVIRONMENT: alpha
  RUNNER_MANAGER_ID: "<YourRunnerManagerIdHere>"
  RUNNER_K8S_NAMESPACE: runner-pool
  RUNNER_K8S_CPU_REQUEST: 400m
  RUNNER_K8S_CPU_LIMIT: 400m
  RUNNER_K8S_MEM_REQUEST: 500Mi
  RUNNER_K8S_MEM_LIMIT: 500Mi
```

NOTE: See Self Hosted Runner Authentication to help locate the RUNNER_MANAGER_ID and RUNNER_MANAGER_KEY values.
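The example values file contains placeholder strings. A quick check before installing can catch a values file that still contains them. The following is a minimal sketch: check_values_file is a hypothetical name, and it only looks for the two placeholders used in the example above.

```shell
# Hypothetical check: fail if the values file is missing or still contains
# the <YourRunnerManagerKeyHere> / <YourRunnerManagerIdHere> placeholders.
check_values_file() {
  local values_file="$1"
  if [ ! -f "$values_file" ]; then
    echo "Values file not found: $values_file" >&2
    return 1
  fi
  if grep -qE '<YourRunnerManager(Key|Id)Here>' "$values_file"; then
    echo "$values_file still contains placeholder values" >&2
    return 1
  fi
}
```

Helm can also render or lint the chart against your values without installing it. To do this, run helm template or helm lint with the same --values argument.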

Install the Runner Pool Container

The following helm install command is an example of how to install the runner-pool container in your Kubernetes cluster:

```shell
helm install my-release \
  /runway/runner-pool-helm/factory-rm-0.135.1 \
  --create-namespace \
  --namespace runner-pool \
  --values /runway/runner-pool-helm/custom.yaml \
  --debug \
  --wait
```

Here is an explanation of each component of the command:

- helm install <name> <chart>: install the chart under the given release name
- --create-namespace: create the release namespace if it is not present
- --namespace: namespace scope for this request
- --values: specify values in a YAML file or a URL (can be specified multiple times)
- --debug: enable verbose output
- --wait: if set, wait until all Pods, PVCs, Services, and the minimum number of Pods of a Deployment, StatefulSet, or ReplicaSet are in a ready state before marking the release as successful. Helm waits for up to the duration set by --timeout (5m0s by default).
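If you install the Runner Pool from a script, you might want to parameterize the command above. The sketch below wraps the same helm install invocation in a function. The environment variable names RELEASE, CHART_DIR, NAMESPACE and VALUES_FILE are hypothetical, and their defaults come from the example paths in this guide. The --timeout 5m flag only makes Helm's default wait duration explicit.

```shell
# Hypothetical wrapper around the helm install command shown above.
# Override any default by setting RELEASE, CHART_DIR, NAMESPACE or VALUES_FILE.
install_runner_pool() {
  local release="${RELEASE:-my-release}"
  local chart_dir="${CHART_DIR:-/runway/runner-pool-helm/factory-rm-0.135.1}"
  local namespace="${NAMESPACE:-runner-pool}"
  local values_file="${VALUES_FILE:-/runway/runner-pool-helm/custom.yaml}"

  helm install "$release" "$chart_dir" \
    --create-namespace \
    --namespace "$namespace" \
    --values "$values_file" \
    --debug \
    --wait \
    --timeout 5m
}
```

For example, NAMESPACE=runner-pool-test install_runner_pool would install the release into a different namespace. Note that helm install fails if the release name is already in use. For repeatable runs, helm upgrade --install accepts the same flags and installs the release only if it does not exist yet.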

Validate the Installation

Once you have executed the helm install command, you will see console output similar to the output below:

```text
install.go:200: [debug] Original chart version: ""
install.go:217: [debug] CHART PATH: C:\Temp\runner-pool-helm\factory-rm-0.135.1
client.go:134: [debug] creating 1 resource(s)
client.go:134: [debug] creating 6 resource(s)
wait.go:48: [debug] beginning wait for 6 resources with timeout of 5m0s
ready.go:277: [debug] Deployment is not ready: runner-pool/factory-rm-kubernetes-runner-pool. 0 out of 1 expected pods are ready
NAME: my-release
LAST DEPLOYED: Tue Aug 29 16:23:52 2023
NAMESPACE: runner-pool
...TRUNCATED...
NOTES:
You have successfully deployed Sophos Factory Runner Manager!
Your new runner manager should show active status shortly.
If this deployment has replaced a previous instance of runner manager, it may take up to 5 minutes for the previous manager to be recycled.
```
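To check the release from a script instead of reading the install output, you can query it with helm status. Its output includes a STATUS line, which reads deployed for a successful release. The function below is a hypothetical sketch that extracts that value. Its release and namespace defaults match the install example.

```shell
# Hypothetical helper: print the Helm release status (for example "deployed").
release_status() {
  local release="${1:-my-release}" namespace="${2:-runner-pool}"
  helm status "$release" --namespace "$namespace" | awk -F': ' '/^STATUS:/ { print $2; exit }'
}
```

A script could then test [ "$(release_status)" = "deployed" ] before moving on to the pod checks.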

Next, check to see if the runner-pool container has been deployed by running the following command:

```shell
kubectl get pods --namespace runner-pool
```

To tail the console output of the runner-pool pod, pass the pod name from the previous command's output to kubectl logs. Replace the example pod name below with your own:

```shell
kubectl logs factory-rm-self-hosted-runner-5857cb9ddb-rrhsh --follow --namespace runner-pool
```
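Instead of copying the pod name, you can let kubectl resolve a pod from the Deployment that owns it. The debug output of the install names the Deployment runner-pool/factory-rm-kubernetes-runner-pool. The sketch below assumes your release produces the same name, which may differ with other values. It waits for the rollout to finish and then follows the logs. The function name is hypothetical.

```shell
# Hypothetical helper: wait for the Runner Pool Deployment to roll out, then
# follow its logs. For "deployment/<name>", kubectl picks one of its pods.
follow_runner_pool_logs() {
  local deployment="${1:-factory-rm-kubernetes-runner-pool}" namespace="${2:-runner-pool}"
  kubectl rollout status "deployment/$deployment" --namespace "$namespace" --timeout=120s &&
    kubectl logs "deployment/$deployment" --namespace "$namespace" --follow
}
```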

If everything is running smoothly, the pod console output should look something like this:

```text
2023-08-29 22:23:56 info: --- Processing Tick #1 ---
2023-08-29 22:23:56 warn: Missing instanceID - self-hosted Runner Pool detected.
2023-08-29 22:23:56 info: --- Tick #1 Completed ---
2023-08-29 22:24:06 info: --- Processing Tick #2 ---
2023-08-29 22:24:06 info: --- Tick #2 Completed ---
```
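To check the log programmatically, for example in a post-install script, you could extract the tick numbers from the "Tick #N Completed" lines and confirm that they keep increasing. This sketch relies only on the log format shown above, which may change between releases. latest_completed_tick is a hypothetical name.

```shell
# Hypothetical helper: read Runner Pool log lines on stdin and print the
# highest tick number from "--- Tick #N Completed ---" lines (empty if none).
latest_completed_tick() {
  sed -n 's/.*--- Tick #\([0-9][0-9]*\) Completed ---.*/\1/p' | sort -n | tail -n 1
}
```

For example, kubectl logs factory-rm-self-hosted-runner-5857cb9ddb-rrhsh --namespace runner-pool | latest_completed_tick prints the latest completed tick, with the pod name replaced by your own. A value that grows between two calls suggests the pool is processing ticks.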

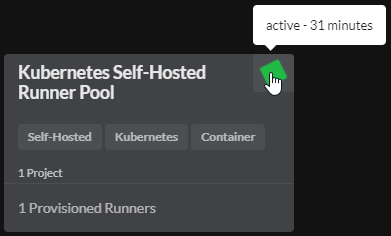

And the Sophos Factory the runner pool should show a spinning green square with a status of active: